Narcissus, Agency, and the Mirror as Interface - Gaston Welisch

- gastonwelisch

- Apr 13, 2024


Updated: Feb 6

This entry is part of the Deep Objekt and Intelligence-Love-Revolution research programs, developed and led by Sepideh Majidi, with contributions from scholars such as David Roden, Francesca Ferrando, Sami Khatib, Keith Tilford, Maure Coise, Amanda Beech, Isabel Millar, Mattin, Thomas Moynihan and more. Partner platforms: Foreign Objekt, Posthuman Art Network, Deep Objekt, The Space Gallery, UFO.

Deep Objekt [0]: miro

In the context of this residency for Deep Objekt, we were tasked with representing our research through a diagram. I've found this task challenging. My work with AI focuses on creating online artefacts designed to prompt users to reflect on various aspects of human-AI interactions. These interactions, being purely online and mostly textual (or hermeneutic relations, as Don Ihde would term them), seem to fail to challenge the traditional model of the interface. This gap might suggest the potential for incorporating more embodied modes of interaction. The difficulty lies in deciding what the diagram should depict. Should it simply show the current functionality of my interfaces through a traditional diagram? This approach, however, risks being redundant: as Alexander Galloway points out in "The Interface Effect", is there really more than one way to represent such a network?

“(…) every map of the Internet looks the same. Every visualization of the social graph looks the same. A word cloud equals a flow chart equals a map of the Internet. All operate within a single uniform set of aesthetic codes. The size of this aesthetic space is one.

But what does this mean? What are the aesthetic repercussions of such claims? One answer is that no poetics is possible in this uniform aesthetic space. There is little differentiation at the level of formal analysis. We are not all mathematicians after all. One can not talk about genre distinctions in this space, one can not talk about high culture versus low culture in this space, (…)”

Galloway, A. R. (2012). The interface effect (pp. 84-85). Polity.

Galloway suggests that this uniformity precludes any form of poetics or differentiation at the level of formal analysis. This limitation led me to consider alternative representations of the interface. Inspired by Brenda Laurel's "Computers as Theatre" (1991), which conceptualises the interface through the metaphor of theatre, I chose to depict AI using the metaphor of magic and divination.

Some of Brenda Laurel's interface diagrams from "Computers as Theatre" (1991)

But metaphors are tricky business. They obscure as much as they reveal: they highlight the qualities two concepts share by setting up an asymmetrical relation between them. In this way they work very much like sympathetic magic (see Mauss, A General Theory of Magic, 1902): we choose some qualities of the object used as a metaphor but not others. Urine, for example, is used in sympathetic "cures" for fever and other ailments because it is associated with Shiva (Shivambu Kalpa, "water of Shiva"). When someone "is an angel", we are associating the kindness and good nature of the angel with the person, not the large wings or the sacred nature. So what I am proposing with magic and divination is a critical metaphor. I am not suggesting that AI is in fact a form of "effortless" technology, as some theoreticians of magic have described magic (notably Alfred Gell, who termed this concept the "magic standard"). Instead, I am proposing that the similarities in the social role of AI, magic and divination, in accessing the unknown and influencing reality, can offer alternative models to both critique and conceptualise AI.

Early diagram that highlights analogous structures between AI and Divination Interfaces

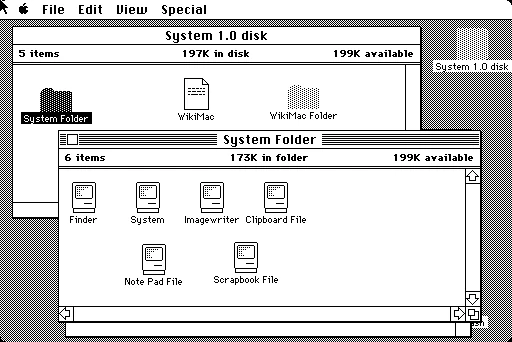

Back to the interface: it is often defined as a "liminal space" where actors meet, negotiate meanings, and shape each other's actions. It is also traditionally seen as a mere tool for facilitating interaction, yet the interface plays a significant role in shaping our experiences with technology. This can be framed by Sherry Turkle's contrasting aesthetics of computing in her 1995 book "Life on the Screen", where she juxtaposes the "transparent" aesthetic of MS-DOS with the "opaque" aesthetic of the Macintosh. MS-DOS, with its code visible and necessary for interaction, is understood mechanically, like an engine whose inner workings a user can realistically understand and engage with. The Macintosh, on the other hand, abstracts away the code (part of the deep object as conceived by this residency), obscuring it behind accessible symbols and tangible metaphors such as the desktop, folders and windows.

The "opaque" Macintosh System 1 and "transparent" MS-DOS

Image sources (respectively): Apple Wiki and Wikipedia

Ultimately, as Turkle notes, the postmodern aesthetic has won out, perhaps out of necessity: despite its mechanical opacity, it values a transparency of affordances, as advocated by key HCI texts and by the discipline of UX design, represented chiefly by Don Norman and his book The Design of Everyday Things (1988). This shift reflects a broader acceptance of interfaces that prioritise ease of use over transparency of mechanics, a theme that will be explored throughout this post.

We will look into the echo-chamber effect of AI chatbots, the philosophical foundations of agency as it relates to interfaces, the use of mirrors in divination juxtaposed with the 'black box' nature of AI, and a re-evaluation of the interface beyond the dichotomy of transparency versus opacity. This analysis will draw on a variety of theoretical frameworks, including Heidegger's critique of technology, Galloway's discussion of the intraface, and the sociological insights of Sherry Turkle.

Narcissus and Narcosis: AI Chatbots and Girlfriends

As a case study, the emergence of AI romantic partners like those offered by Replika AI provides a compelling example through which to scrutinise human-AI interactions and how the interface between these actors is designed. McLuhan's (1964) interpretation of Narcissus as a victim of his own self-induced narcosis offers a good metaphor for these human-AI interfaces. The contemporary reading of Narcissus as self-absorbed is overly literal and misses the fact that Narcissus, in the original myth, does not recognise that the reflection is himself. The news stories of "Pierre" (who died by suicide after being encouraged, and provided with methods, by a chatbot) and Jaswant Singh Chail (who broke into the grounds of Windsor Castle intending to assassinate the late Queen), as reported by Xiang (2023) and Singleton et al. (2023) respectively, demonstrate the pitfalls of interactions with AI romantic partners. These individuals were trapped by their own desires and anxieties, mirrored back to them by large language models designed to engage and retain their attention.

Screenshot from Replika's Google Play Store page (2024, Luka Inc.)

AI chatbots adapt to their users' needs, rarely saying "no" and often reinforcing viewpoints. This inherent agreeableness can lead users down a path of self-reinforcing loops. In essence, the chatbot becomes an "echo chamber of one", amplifying the user's initial input without offering the resistance or contradiction otherwise present in typical human discourse. These chatbots inadvertently validate and reinforce the user's perspectives, potentially exacerbating harmful patterns of thought and behaviour. The AI's inability to meaningfully challenge the user's views means that the interaction remains fundamentally unidirectional: responsive, never proactive.

The interface through which these interactions occur further obscures the nature of the exchange (the "opaque" model discussed earlier). The graphical and textual elements of chatbot applications mask the underlying AI models, creating an illusion of continuity and agency. This masking contributes to the narcotic experience McLuhan describes, numbing the user to the artificiality of the interaction and fostering an anthropomorphised sense of connection. After all, this mirror is an illusion. Just as there is no space behind the surface of the mirror, there is no girlfriend behind the chatbot, just a company that can "turn off" your girlfriend mirage.

The chatbots become reflections of users' thoughts, biases, and desires. These reflections, without the friction, temporality and challenges inherent in human interaction, can lead to a state of narcosis, where the mirror amplifies existing tendencies and alienates the individual. This state of narcosis induced by AI interfaces, where users' thoughts and desires are reflected and amplified without challenge, brings us to question the nature of agency within these interactions. When actions and outcomes are shaped by a seemingly responsive yet fundamentally directive technology, it is essential to examine how agency is distributed and manifested.

Agency and the Interface

Agency is the capacity to act, to initiate change. Aristotle, in his Poetics, frames the agent as one who instigates action.

The interface is imbued with its own form of agency. It shapes our perceptions, frames our interactions, and, in many ways, predetermines the range of possible outcomes. This is not agency in the Aristotelian sense, where clear, intentional actions are initiated by a conscious being (like the illusion of anthropomorphic agency provided by chatbots), but rather a more diffused, Heideggerian form of agency, where technology “enframes” our way of seeing the world.

The human user is presumed to be the primary agent, the initiator of interactions. We pose questions, express desires, and seek companionship from AI models. Yet, this perception of human primacy is, to some extent, also an illusion.

Interfaces of two of my experimental AI works: Omen_OS (2023), a fortune-telling interface, and Botter (2023), a bot-powered misinformation social media platform

Where does agency reside in this interaction? Is it with the human user, who initiates the conversation, or with the AI, which generates responses that can influence the user's thoughts and emotions? Or is it perhaps nested within the interface itself, which dictates the parameters of the interaction? We must additionally consider the role of AI developers and interface designers, whose decisions and biases are embedded within the chatbots. In the case of my artworks, how do my choices negate or empower the agency of actors to shape their own experience of the work? The interface is an active participant in shaping the discourse, guiding the user's journey through design elements, prompts, and constraints. Galloway's (2012) notion of the interface effect underscores this point, suggesting that the interface, rather than a space facilitating exchange, is an action which constructs and includes the interaction.

This construction is not neutral; it embodies the intentions, assumptions, and biases of its creators. The values embodied by the interface tie back to Turkle's aesthetics of computing. It is a key ethical issue of AI that the apparent polish and fidelity of a model may lead users to "fall asleep at the wheel", both metaphorically and, in the case of self-driving cars, literally. Human-in-the-loop systems face a recurring issue of misplaced trust, and this is not a new phenomenon: in 2003, a Patriot air-defence missile shot down a British Tornado over Iraq, killing its two crew members, after the system misidentified the aircraft as an incoming missile and the operators did not override the engagement within the one-minute window they had to do so.

Ultimately, my ideal goal for these works would be to design a participative framework where the direction of the interaction is negotiated from both ends of the interface. Bernard Stiegler uses the concept of "transindividuation" to describe the process through which technologies are adopted. In the same way that language is constantly being shaped and changed, technologies like open-source software are co-steered. Service capitalism, however, centralises the design and shaping of desires around technologies, and alienates us from this transindividuation. It is my hope that works of art, like those offered by critical design, can offer alternative models.

Catoptromancy in popular culture: Snow White's Evil Queen in front of the Mirror.

Illustration by John Batten for Joseph Jacobs's Europa's Fairy Book (1916).

The Mirror as Interface: Divination and AI

In this search for alternative conceptual models of AI, looking toward the uses of mirrors in divination felt like the next logical step, given the earlier exploration of the Narcissus myth. Scrying and catoptromancy involve the practice of looking into reflective surfaces—often mirrors—to uncover knowledge or divine the future. In divination, mirrors are interfaces that claim to offer insights beyond the superficial or immediately apparent. This is rooted historically in various cultures; the Ancient Romans utilised polished metal surfaces for catoptromancy to communicate with the divine. The mirror is an evocative conceptual symbol, enabling the viewer to "see through" or beyond the surface. Users of AI are often required to interpret interfaces that reflect their own inputs. As in catoptromancy, the true meaning of what is reflected cannot be taken at face value but must be interpreted through an understanding of the underlying systems.

The AI-generated responses in chatbots may seem to reflect the user's queries and sentiments, but they are reconstructions based on data models that have their own embedded biases and limitations. This calls for a hermeneutics of suspicion, similar to the cautious interpretation a catoptromancer might practise when questioning the visions seen in the mirror. This aligns with Galloway's notion of the intraface (the relationship between the center and the edge), where the interface is not a passive, transparent surface but an active site of engagement that includes visible layers of meaning and function embedded within the interface itself (like the gauges and dials of an MMORPG such as World of Warcraft).

The postmodern rejection of pure transparency in favour of an aesthetic that acknowledges the medium itself offers a crucial lesson in the empowerment of users. Just as in catoptromancy, where the interpretation of images requires an understanding of the context and the medium, AI interfaces that integrate signs and symbols of their operational logic (like the metaphors of folder icons or feedback loops) can help demystify AI operations for users.

To apply this, AI systems could be designed to include elements that either reveal (transparent aesthetic) or symbolise (opaque aesthetic) their functioning and biases more explicitly, much as in scrying rituals the arrangement of objects around the mirror influences the interpretation. An AI system could use intraface elements that suggest actions but also display the reasoning behind those suggestions. Could this prevent the narcotic effect of overly seamless interactions?

The friction within AI interfaces (the artefacts, the deliberate opacities) can be valuable. It reminds the user of the constructed nature of the interaction and guards against the uncritical acceptance of AI outputs. Just as distortions in a mirror prompt scrutiny during a catoptromancy session, deliberate complexities and imperfections in AI interfaces could inspire a more engaged and critical interaction from the user, encouraging a more reflexive engagement with technology.

Summary: The Mirror as Interface diagram

References

Galloway, A. R. (2012). The interface effect. Polity.

Gell, A. (1992). The technology of enchantment and the enchantment of technology. In J. Coote & A. Shelton (Eds.), Anthropology, art and aesthetics. Clarendon Press.

Ihde, D. (1990). Technology and the lifeworld: From garden to earth. Indiana University Press.

Laurel, B. (1991). Computers as theatre. Addison-Wesley.

Mauss, M. (2001). A general theory of magic (R. Brain, Trans.). Routledge. (Original work published 1902).

McLuhan, M. (1964). Understanding media: The extensions of man. McGraw-Hill.

Norman, D. A. (1988). The design of everyday things. Basic Books.

Stiegler, B. (1998). Technics and time, 1: The fault of Epimetheus. Stanford University Press.

Turkle, S. (1995). Life on the screen: Identity in the age of the internet. Simon & Schuster.
